If you’re behind on what’s happening with the robot uprising, have no fear. Here’s a quick look at some of the weirdest and wildest artificial intelligence news from the past week. Also, don’t forget to check out our weekly AI write-up, which will go into more detail on this same topic.

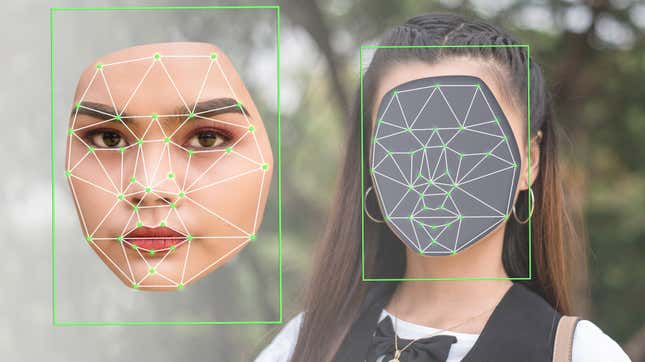

We’ve known for quite some time that AI is going to make the world’s disinformation crisis soooo much worse. A new study, published this week, reaffirms that suspicion. The research homes in on the role deepfakes are playing in combat zones like Ukraine, and the results are decidedly not good.

Riley Reid, porn star and entrepreneur, has launched a new platform, Clona.ai, that offers AI chatbots built with the content and consent of real adult actresses. Sorta like ChatGPT…but with porn.

Researchers recently created a tool to help human artists fight back against AI companies that would use their work to train content-generating algorithms. Enter “Nightshade,” software that artists can use to “poison” image-generating AI models that attempt to ingest their art. While “Nightshade” might be a nice stopgap, it’s really not the solution the creative industry needs. Instead, we need laws that regulate how AI companies use copyrighted material to train their algorithms.

Tech giants like Google, Microsoft, and OpenAI keep claiming that their AI technology could pose an existential risk to the planet and that it needs to be regulated ASAP. Yet the money those companies are apparently willing to put toward researching adequate safeguards amounts to as little as $10 million. Kyle Barr has the full story.

The United Nations has formed a new 39-member advisory body that will produce regulatory recommendations that countries can look to as they try to navigate the technological upheaval spurred by new AI platforms.