Apple is widely expected to introduce its long-rumored mixed reality headset at WWDC 2023. That will surprise few people, in part because Apple has been singing the praises of augmented reality since at least WWDC 2017. That's when Apple began laying the groundwork for the headset's technology through developer tools on the iPhone and iPad.

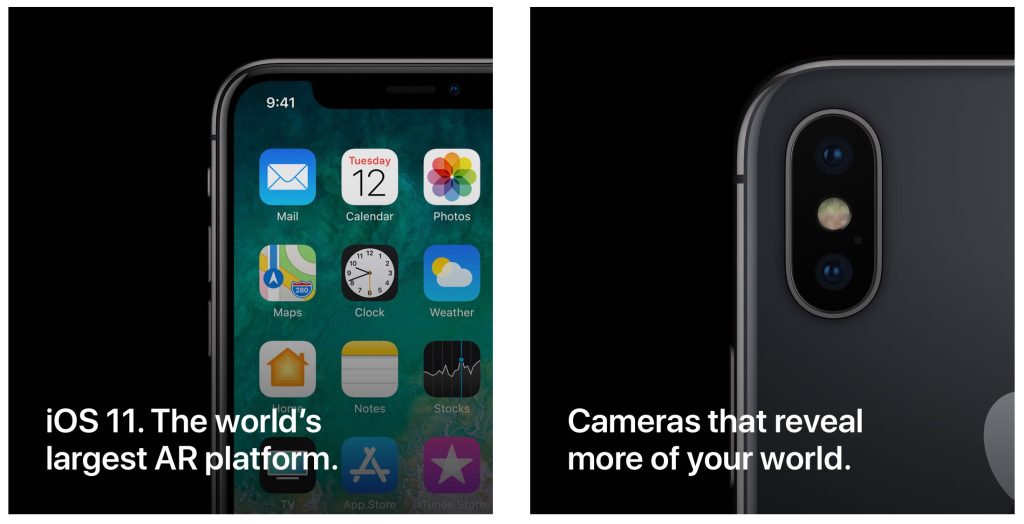

At WWDC 2017, Apple first introduced ARKit, its augmented reality framework that helps developers create immersive experiences on iPhones and iPads.

ARKit was such a focus for Apple in the years that followed that the company dedicated much of its last few live keynotes to introducing and demonstrating new AR capabilities. Who could forget the sparse wooden tabletops that served as surfaces for building virtual LEGO sets on stage?

By emphasizing these tools, Apple communicated the importance of augmented reality technology as part of the future of its platforms.

Software isn't the only thing Apple began designing for a mixed reality future. iPhone and iPad hardware also became better equipped to serve as portable windows into an augmented reality world.

Starting with Face ID and Apple's Animoji (and later Memoji) features, Apple began tuning the iPhone for AR capabilities. Internally, Apple tailored the iPhone's Neural Engine to handle augmented reality without breaking a sweat.

The rear camera system on Pro iPhones even gained a dedicated LiDAR sensor, the same kind of depth-sensing technology used by lunar rovers navigating the surface of the Moon and by driverless cars reading their surroundings.

There was even an iPad Pro hardware update that almost entirely focused on the addition of a LiDAR scanner on the back camera.

Why? Sure, it helped with focusing and sensing depth for Portrait mode photos, but there were also dedicated iPad apps for decorating your room with virtual furniture or trying on glasses without actually having the frames.

What's been clear from the start is that ARKit was never meant to deliver immersive experiences only through the iPhone and iPad. The phone screen is too small to be truly immersive, and the tablet is too heavy to hold for long periods.

There are absolutely uses for AR on iPhones and iPads. Catching pocket monsters out in the real world in Pokémon GO is more whimsical than catching them in an entirely digital environment. Dissecting a virtual creature in a classroom can also be more welcoming than touching actual guts.

Still, the most immersive experiences, the ones that truly trick your brain into believing you're surrounded by whatever digital content you're seeing, require goggles.

Does that mean everyone will care about AR and VR enough to make the headset a hit? Reactions to AR on the iPhone and iPad have, at times, been that Apple is offering a solution in search of a problem.

Still, there are some augmented reality experiences that are clearly delightful.

Want to see every dimension of an announced but unreleased iPhone or MacBook? AR is probably how a lot of people first experienced the Mac Pro and Pro Display XDR.

Projecting a virtual space rocket at 1:1 scale in your living room will also give you a decent idea of how enormous these machines are. Experiencing a virtual rocket launch that lets you look back on the Earth as if you were a passenger could be exhilarating, too.

Augmented reality has also been the best way to introduce my kids to dinosaurs without risking time travel and bringing a T. rex back to the present day.

As for ARKit, Apple has been openly building a number of tools that developers will likely use to create headset experiences starting next month.

For starters, the framework gave developers the tools, APIs, and libraries needed to build AR apps in the first place. Motion tracking, scene detection, light estimation, and camera integration are all necessary building blocks for AR apps.

Real-world tracking is another important factor. ARKit introduced the tools needed to use hardware sensors like the camera, gyroscope, and accelerometer to accurately anchor virtual objects in a real environment as you move an Apple device around.

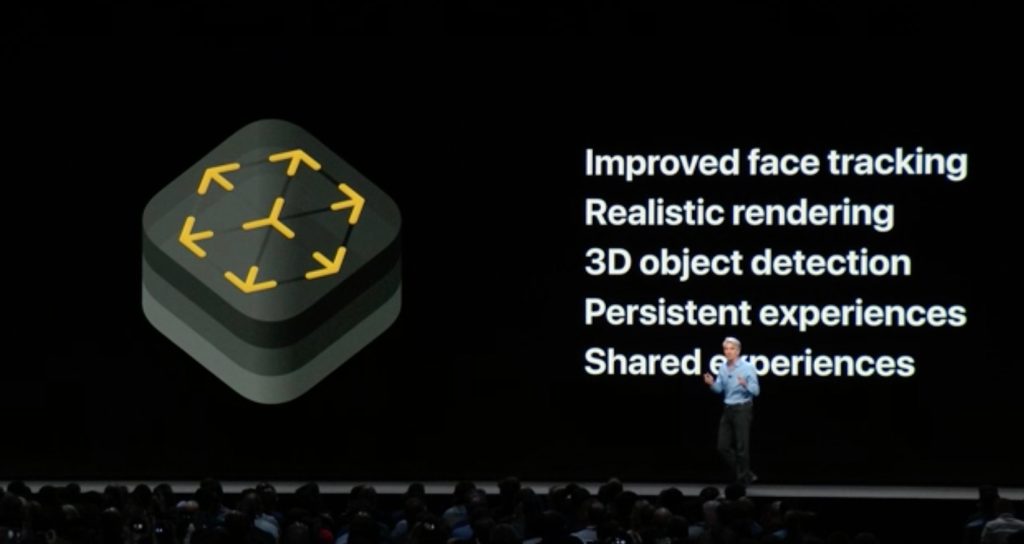

Then there's face tracking. ARKit lets developers use the same face tracking capabilities that Apple relies on to power Animoji and Memoji, including mirroring your facial expressions.

AR Quick Look powers the kind of product previews mentioned earlier. It's what AR experiences use to place virtual objects, like products, in the real environment around you. Properly scaling these objects and remembering their position relative to your device helps create the illusion.

More recent versions of ARKit have focused on supporting shared AR experiences that can persist between sessions, detecting objects in your environment, and letting people in a scene correctly pass in front of virtual content (people occlusion). Performance has also been steadily tuned over the years, so the core technology powering virtual and augmented reality experiences on the headset should be pretty solid.

We expect our first official glimpse of Apple’s headset on Monday, June 5, when Apple kicks off its next keynote event. 9to5Mac will be in attendance at the special event so stay tuned for comprehensive, up-close coverage. Best of luck to the HTC Vives and Meta headsets of the world.
